As a contact center leader, you’re responsible for keeping operational costs down while keeping customer satisfaction high. It’s easy to see the appeal of a chatbot that can deflect phone calls, deliver near-instant answers, and assist customers 24/7.

Even a basic conversational AI bot, which follows a pre-built conversation flow based on customers’ input, can deliver some big wins to contact centers. These bots can handle straightforward questions, freeing human contact center agents up to focus on resolving more complex issues.

Chatbots also give customers a low-effort channel to ask questions and resolve basic issues. (It shouldn’t come as a surprise that 81% of customers attempt to resolve their issues through self-service channels before contacting a live agent.)

And the cost savings of chatbots are hard to ignore. Live channels cost an average of $8.01 per contact, while self-service channels cost just $0.10.

With the introduction of generative AI, the potential benefits of chatbots are even greater. A generative AI model can tap into your company’s knowledge base, CRM, and other data sources to provide personalized, highly relevant answers to your customers, deflecting even more phone calls and live agent chats.

But that doesn’t mean you should implement a generative AI chatbot and leave it to its own devices. You need to manage your chatbot and provide ongoing training, just as you do with your human customer service agents. There are several risks to putting your customer service in the virtual hands of an AI chatbot without oversight and continuous training, including the possibility that it will share information that is false or not representative of your company, or that bad actors will manipulate it to commit fraud.

If your contact center is using a generative AI-powered chatbot or planning to roll one out soon, you’ll need to put up guardrails to reduce the risks and determine the appropriate handoff points between your virtual and live agents. A conversation intelligence platform can provide those guardrails by monitoring your chatbot and immediately notifying the appropriate teams if its responses go rogue or if a live agent needs to jump into the chat. Additionally, a conversation intelligence solution can arm you with the insights you need to train your chatbot to assist customers more effectively. For example, it can help you identify chatbot responses that reduce customer effort, reduce repeat contacts and channel switching, and mitigate churn risks–and you can use this data to fine-tune your chatbot’s performance.

Let’s take a closer look at how AI chatbots work, their risks, and how conversation intelligence can make them safer and more effective.

How do AI chatbots work?

Generative AI chatbots are built on large language models (LLMs): machine learning models trained on large text data sets to recognize language patterns and understand the context of conversations. They can be trained on open data sources (publicly available online) or a company’s proprietary data sets. This training enables chatbots to process customers’ messages and respond with relevant, human-like answers.

A deployed chatbot can also be retrained or fine-tuned on new customer conversations so that its responses improve over time. Businesses can fine-tune their chatbot on specific data sets to align it with their brand voice and deliver specialized knowledge to their customers.

Here are a few examples of companies using generative AI chatbots:

- Consulting firm McKinsey & Company’s chatbot, Lilli, searches a library with more than 100,000 documents and 40 knowledge sources, delivers recommended content to clients, and summarizes key points.

- Navan, formerly TripActions, has a generative AI chatbot called Ava that answers customer service questions and offers conversational travel advice and booking assistance.

- JetBlue is using a generative AI-powered chatbot in their contact center to field customer service queries. Their contact center reports that the chatbot is saving 73,000 hours per quarter and giving their agents more time to assist customers with complex issues.

What are the risks of AI chatbots?

For all the benefits of AI chatbots, there are risks that are giving many organizations pause–especially those in financial services, healthcare, utilities, and other regulated industries.

- Sharing inaccurate information (with confidence). Generative AI models are prone to hallucinations–outputs that might sound convincing on the surface but that are inaccurate or illogical. For instance, a credit union bot might offer members loans or interest rates that aren’t available. Or a chatbot for a financial services firm might offer recommendations that haven’t been vetted by a qualified advisor. Hallucinations like these pose a huge compliance risk in regulated industries and could lead to fines, legal fees, and reputational damage.

- Incorporating harmful biases. There’s a risk that a generative AI model will pick up on subtle, implicit biases in its training data and incorporate those biases into its responses, potentially causing it to treat customers differently based on factors like race or gender.

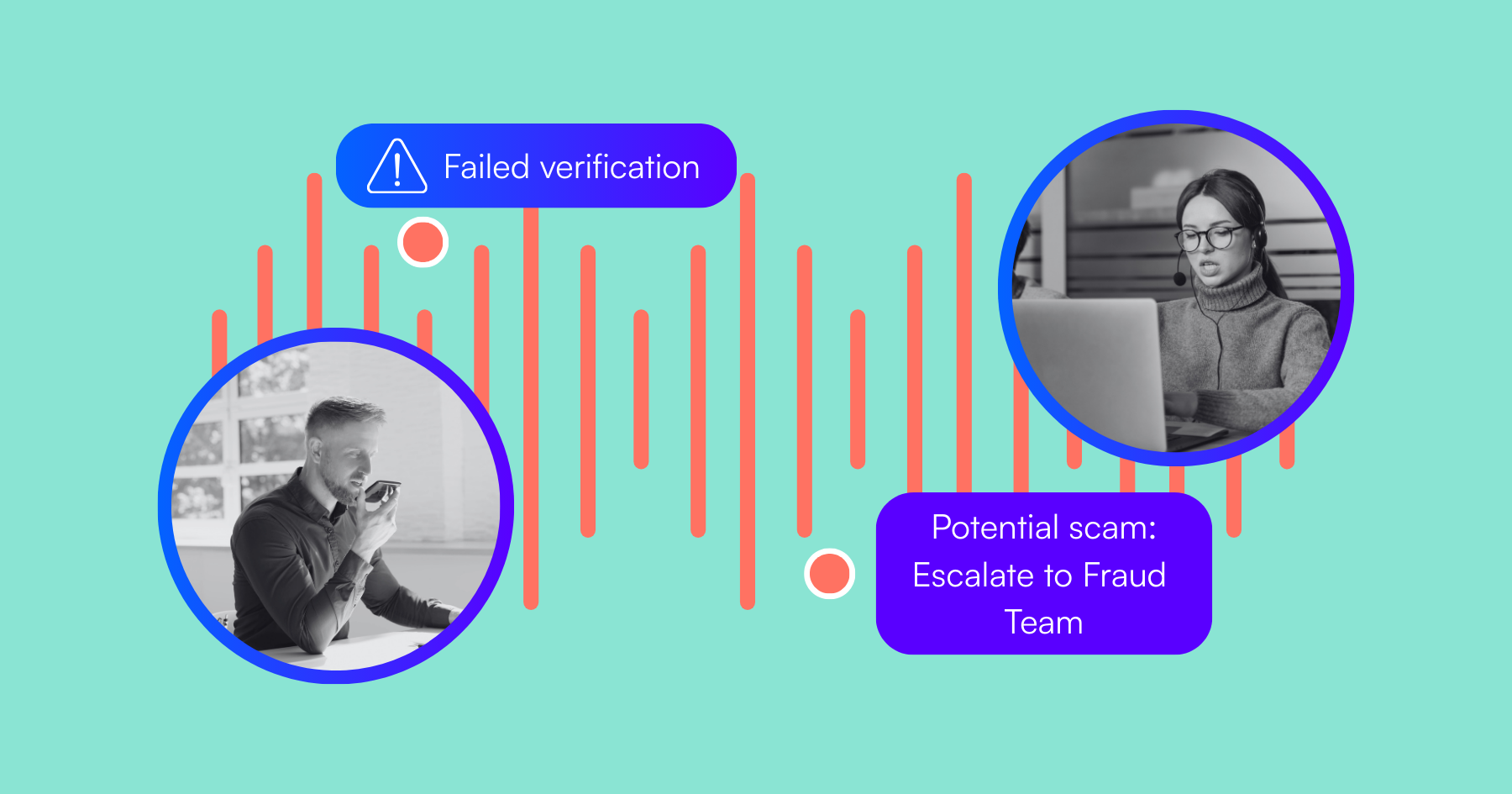

- Opening the door to security threats and fraud. While generative AI chatbots are relatively new, cyber attackers are already finding ways to manipulate them. For example, it may be possible for an attacker to alter open data sources that a chatbot is trained on to get it to share a link containing malware or spread false information.

How conversation intelligence keeps chatbots on track

So do the AI chatbot risks outweigh the benefits?

Not necessarily.

While it’s important not to rush into implementing an AI chatbot before considering the potential risks and vulnerabilities, it is possible to be successful with proper precautions.

One of the most important things to keep in mind with a generative AI chatbot is that it will still require human oversight. For organizations fielding a large volume of chat conversations, the challenge becomes monitoring every interaction without negating the time-saving benefits of the chatbot.

That’s where a conversation intelligence solution can help. Conversation intelligence, or conversation analytics, uses machine learning to organize and transform unstructured conversation data into meaningful information that businesses can use.

Your business can integrate a conversation intelligence platform with your AI chatbot and use it to monitor the chatbot’s outputs. Essentially, it enables you to “listen” to the chatbot at scale, catch any instances of unapproved responses, and bring in your human agents when appropriate.

Here’s how you can use conversation intelligence to manage AI chatbot risks:

1. Train your conversation intelligence platform on what you DON’T want your chatbot to say.

Conversation intelligence software can process phrases in customer conversations and organize them based on their meaning, helping businesses quickly understand what went well–or didn’t–without requiring employees to listen to every phone call or read every chat transcript. For example, our conversation intelligence platform, Creovai, can recognize thousands of phrases that indicate a customer is frustrated and flag points of frustration in conversations so businesses can identify areas for improvement.

If you’re planning to use a generative AI chatbot, you can train your conversation intelligence platform on phrases you never want your chatbot to use. For instance, if you manage the contact center for a credit union and are concerned about the chatbot offering members non-existent loans, you could train your conversation intelligence platform to flag when the phrase “I can offer” is used near the word “loan.”
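To make the credit union example concrete, here is a minimal sketch of a proximity rule in Python. It is an illustration only, not how Creovai or any other platform is implemented: the function names, the word-based tokenizer, and the default window of eight words are all assumptions chosen for the example.

```python
import re

# Hypothetical tokenizer: lowercase words and digits, apostrophes kept.
TOKEN_PATTERN = re.compile(r"[a-z0-9']+")


def tokenize(text: str) -> list[str]:
    # Normalize curly apostrophes so "can’t" and "can't" tokenize the same way.
    return TOKEN_PATTERN.findall(text.lower().replace("’", "'"))


def phrase_near_keyword(message: str, phrase: str, keyword: str, window: int = 8) -> bool:
    """Return True if `phrase` appears within `window` words of `keyword`.

    `keyword` is matched as a prefix, so "loan" also matches "loans".
    """
    tokens = tokenize(message)
    phrase_tokens = tokenize(phrase)
    n = len(phrase_tokens)
    keyword = keyword.lower()
    keyword_positions = [i for i, tok in enumerate(tokens) if tok.startswith(keyword)]

    for start in range(len(tokens) - n + 1):
        if tokens[start:start + n] != phrase_tokens:
            continue
        end = start + n - 1
        # Flag if any keyword occurrence falls inside the window around the phrase.
        if any(start - window <= pos <= end + window for pos in keyword_positions):
            return True
    return False


if __name__ == "__main__":
    reply = "I can offer you a personal loan at a special rate."
    print(phrase_near_keyword(reply, "I can offer", "loan"))
```

A hand-written rule like this only catches the exact wording you anticipated, which is why it works best as a starting point rather than the whole safeguard.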

It wouldn’t be practical to manually build a list of every phrase you want to ban from your chatbot or attempt to predict all the different ways the chatbot could go rogue, but fortunately, you don’t have to. Because a conversation intelligence platform is built on machine learning models, it will continue to train on the conversation data available, meaning it will become increasingly fine-tuned to the language your business wants your chatbot to avoid.

2. Send automated notifications to the appropriate teams when one of those “DON’T say” phrases occurs.

Once you’ve set your conversation intelligence platform up to flag “rogue” chatbot statements, it’s time to take action. You can configure automated notifications to go to the appropriate people (such as your legal or data science teams) whenever one of these statements occurs so that they can ensure customers aren’t getting inaccurate information and adjust the chatbot’s parameters with additional training data to prevent the issue from happening again.
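The routing step might look something like the sketch below. Everything in it is hypothetical: the category names, the team names, and the `Notification` structure are placeholders, and a real deployment would hand these records to whatever alerting tool (email, chat, ticketing) your teams already use.

```python
from dataclasses import dataclass

# Hypothetical mapping from a flag category to the teams that should hear about it.
ROUTING = {
    "unapproved_offer": ["legal", "data_science"],
    "biased_language": ["compliance"],
}
# Fallback recipients for categories with no explicit route.
DEFAULT_TEAMS = ["contact_center_supervisors"]


@dataclass
class Notification:
    team: str
    conversation_id: str
    category: str
    excerpt: str


def build_notifications(conversation_id: str, category: str, excerpt: str) -> list[Notification]:
    """Create one notification per team responsible for the flagged category."""
    teams = ROUTING.get(category, DEFAULT_TEAMS)
    return [Notification(team, conversation_id, category, excerpt) for team in teams]


if __name__ == "__main__":
    for note in build_notifications("chat-1042", "unapproved_offer", "I can offer you a loan"):
        print(f"Notify {note.team}: [{note.category}] in {note.conversation_id}: {note.excerpt!r}")
```

Keeping the routing table separate from the detection logic means you can change who gets notified without touching the rules that flag statements.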

Contact centers in highly regulated industries may want to take additional precautions and have human agents review chatbot outputs before pushing them to the customer. This still allows the contact center to operate more efficiently than it could with human agents alone: the chatbot generates a near-instant response based on the customer’s input, and the agent simply reviews and approves it. Using a conversation intelligence platform to flag problematic statements makes it easy for agents to quickly see which chatbot outputs need a closer look and potential editing before going to the customer.
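As a rough sketch of that review workflow, the snippet below labels each chatbot draft and moves flagged drafts to the front of the agent's queue. The function name and the `is_flagged` callback are assumptions for illustration; in practice the flag would come from your conversation intelligence platform rather than a simple keyword check.

```python
from typing import Callable, List, Tuple


def build_review_queue(
    drafts: List[str],
    is_flagged: Callable[[str], bool],
) -> List[Tuple[str, bool]]:
    """Pair each draft with a needs-closer-look label, flagged drafts first.

    Every draft still goes to an agent for approval; the label only tells the
    agent which ones deserve extra scrutiny. Python's sort is stable, so drafts
    keep their original order within the flagged and unflagged groups.
    """
    labeled = [(draft, is_flagged(draft)) for draft in drafts]
    return sorted(labeled, key=lambda item: not item[1])


if __name__ == "__main__":
    drafts = [
        "Your statement is available in the mobile app.",
        "I can offer you a loan today.",
    ]
    # Stand-in for a real flagging service: a naive keyword check.
    queue = build_review_queue(drafts, lambda text: "loan" in text.lower())
    for draft, needs_closer_look in queue:
        label = "REVIEW CLOSELY" if needs_closer_look else "quick approve"
        print(f"[{label}] {draft}")
```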

Final takeaways

Generative AI chatbots can be powerful tools for assisting customers more efficiently, reducing contact center operational costs, and improving the overall customer experience. However, they should be viewed as helpful assistants–not advisors that you trust to represent your company without supervision.

If you plan to implement a generative AI chatbot, you need to be responsible about how you deploy it. Human oversight can help you manage AI chatbot risks, and conversation intelligence enables you to have that oversight at scale.
